SAFEXPLAIN - Building Trustworthy AI for Critical Embedded Systems

Autonomous embedded systems are the invisible intelligence behind many of today’s most advanced technologies, from spacecraft and self-driving cars to medical and industrial equipment.

These systems operate independently inside larger machines, executing safety-critical tasks where even a minor malfunction could have serious consequences.

Because of this, reliability, real-time performance, and safety verification are non-negotiable.

AI models, however, generally behave as black boxes: they learn mostly from data rather than from explicit instructions, so their reliability depends on the quality, diversity, and balance of that data. Ensuring that AI solutions remain safe and explainable is one of today’s greatest engineering challenges.

SAFEXPLAIN: safe and explainable AI

The SAFEXPLAIN project was created to bridge the gap between advanced AI solutions and the strict safety culture of mission-critical systems.

Funded by the European Union’s Horizon Europe program (2022–2025), the project brought together a world-class consortium: the Barcelona Supercomputing Center (BSC), AIKO, IKERLAN, RISE, NAVINFO Europe, and Exida Development S.R.L.

The aim? Making AI transparent, trustworthy, and certifiable in domains like aerospace, automotive, and railway.

The project reimagined the traditional safety lifecycle by integrating development steps specific to AI. SAFEXPLAIN did not stop at theory, though: it developed practical guidelines, libraries, and software tools, then validated them through three real-world case studies, one for each domain.

The space case study: safe autonomy beyond Earth

*Copyright AIKO

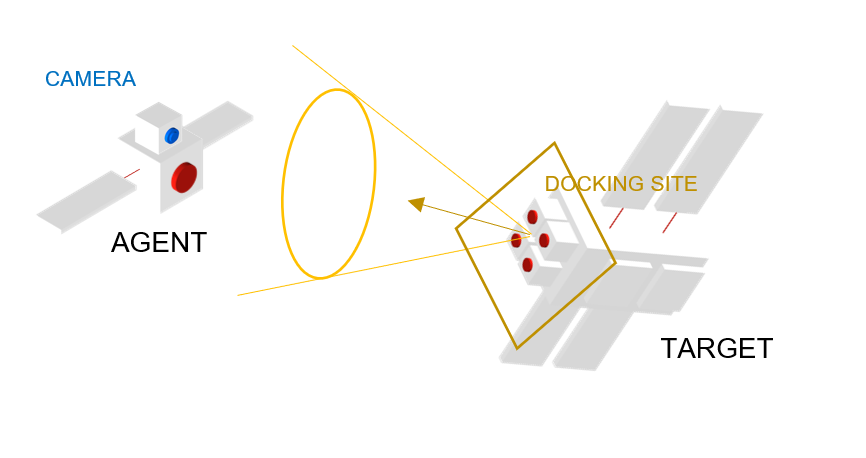

AIKO led the space case study, focusing on a scenario central to the future of orbital operations: a spacecraft autonomously approaching another satellite.

At the heart of this operation is pose estimation, a key component of the Guidance, Navigation, and Control (GNC) system. It determines the position and orientation of the target spacecraft using visual data.
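To make "position and orientation" concrete, here is a minimal sketch of how a pose and its estimation error could be represented. It is not the SAFEXPLAIN or AIKO implementation: the `Pose` class, the quaternion convention (w, x, y, z), and the two error functions are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose:
    """Relative pose of the target spacecraft (hypothetical structure)."""
    position: Tuple[float, float, float]            # metres, camera frame
    quaternion: Tuple[float, float, float, float]   # unit quaternion (w, x, y, z)


def translation_error(estimate: Pose, reference: Pose) -> float:
    """Euclidean distance between estimated and reference positions."""
    return math.dist(estimate.position, reference.position)


def rotation_error_deg(estimate: Pose, reference: Pose) -> float:
    """Smallest rotation angle, in degrees, separating the two orientations."""
    dot = abs(sum(a * b for a, b in zip(estimate.quaternion, reference.quaternion)))
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))


# Example: the estimate is off by 0.5 m and by 10 degrees about the x axis.
half_angle = math.radians(10.0) / 2.0
reference = Pose((0.0, 0.0, 10.0), (1.0, 0.0, 0.0, 0.0))
estimate = Pose((0.3, 0.4, 10.0),
                (math.cos(half_angle), math.sin(half_angle), 0.0, 0.0))

print(translation_error(estimate, reference))
print(rotation_error_deg(estimate, reference))
```

Error measures of this kind give a diagnostic layer a number to compare against a limit, which is easier to reason about than raw model outputs.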

AI models trained on grayscale camera inputs can perform this estimation autonomously, but doing so safely and explainably requires a robust architecture to meet the targeted Safety Pattern.

Inside the system: layers of safety and trust

The SAFEXPLAIN system was deployed on the NVIDIA Jetson AGX Orin platform, a powerful edge computing device commonly used in robotics and control applications.

Its architecture was designed to follow the Safety Pattern 2 concept, combining redundancy, diagnostics, and supervision to ensure safe operation.

This design ensured that even under unexpected conditions, such as sensor noise or lag, the system could detect anomalies and act safely.
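The interplay of redundancy, diagnostics, and supervision can be sketched in a few lines. This is an illustration only, not the Safety Pattern 2 definition or the deployed SAFEXPLAIN code: the `supervise` function, the `Action` values, and the thresholds in `Limits` are all hypothetical.

```python
import math
from dataclasses import dataclass
from enum import Enum
from typing import Tuple

Vec3 = Tuple[float, float, float]


class Action(Enum):
    CONTINUE = "continue"  # keep approaching
    HOLD = "hold"          # stop closing in until fresh data arrives
    ABORT = "abort"        # retreat to a safe distance


@dataclass(frozen=True)
class Limits:
    """Illustrative thresholds; real values would come from the safety analysis."""
    max_frame_age_s: float = 0.2
    max_disagreement_m: float = 0.5


def supervise(primary: Vec3, redundant: Vec3, frame_age_s: float,
              limits: Limits = Limits()) -> Action:
    """Pick a safe action from two redundant position estimates and the frame age."""
    # Redundancy check: two independent channels should roughly agree.
    if math.dist(primary, redundant) > limits.max_disagreement_m:
        return Action.ABORT
    # Diagnostic check: a stale frame means the estimate may no longer be valid.
    if frame_age_s > limits.max_frame_age_s:
        return Action.HOLD
    return Action.CONTINUE


print(supervise((0.0, 0.0, 10.0), (0.1, 0.0, 10.0), frame_age_s=0.05))
print(supervise((0.0, 0.0, 10.0), (0.1, 0.0, 10.0), frame_age_s=0.5))
print(supervise((0.0, 0.0, 10.0), (2.0, 0.0, 10.0), frame_age_s=0.05))
```

The point of the sketch is the ordering: the supervisor never trusts a single estimate on its own, and every failed check maps to a predefined safe action rather than to a best guess.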

Live demo: trustworthy AI in action

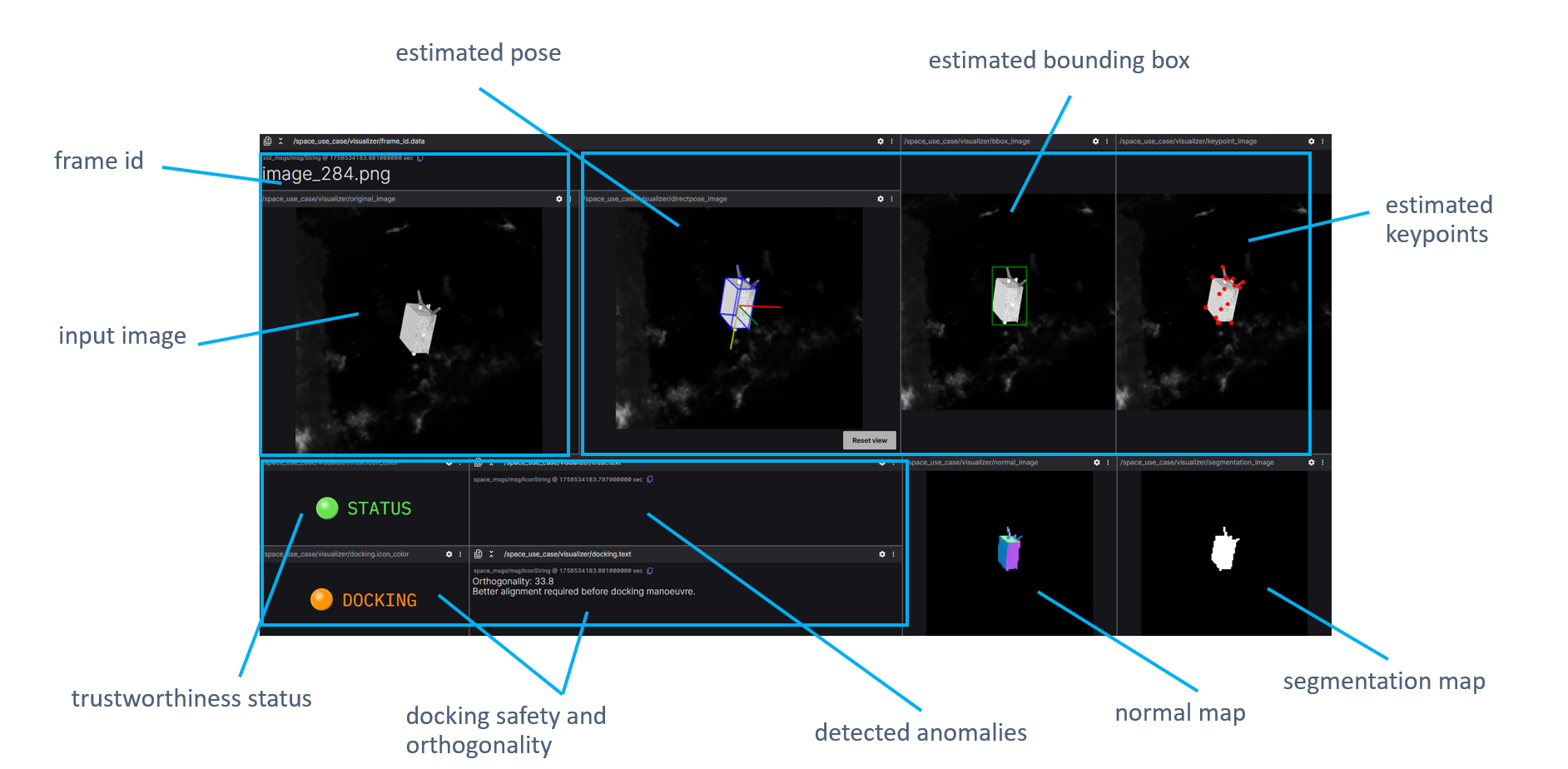

At the “Trustworthy AI in Safety-Critical Systems” event hosted by BSC, SAFEXPLAIN came to life.

In a live demo, we simulated a satellite approach sequence under varying anomalous conditions: sensor noise, delayed frames, and deliberate model perturbations.

The system’s diagnostics detected each injected anomaly, while the safety control module continuously assessed docking feasibility.
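For readers who want a feel for what injecting and detecting an anomaly can look like, here is a toy sketch for the sensor-noise case. It does not reproduce the demo's diagnostics: the synthetic flat frame, the `roughness` metric, and the threshold are assumptions, and a real camera image would need a calibrated check because natural texture also raises this metric.

```python
import random
from typing import List

Frame = List[List[int]]  # grayscale image, one 0-255 integer per pixel


def inject_gaussian_noise(frame: Frame, sigma: float, rng: random.Random) -> Frame:
    """Return a copy of the frame with zero-mean Gaussian noise added to every pixel."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in frame]


def roughness(frame: Frame) -> float:
    """Mean absolute difference between horizontally adjacent pixels."""
    diffs = [abs(row[i + 1] - row[i]) for row in frame for i in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


def is_noisy(frame: Frame, threshold: float = 5.0) -> bool:
    """Flag frames whose roughness exceeds an illustrative threshold."""
    return roughness(frame) > threshold


rng = random.Random(0)                    # fixed seed for a repeatable run
clean = [[128] * 32 for _ in range(32)]   # flat synthetic frame
noisy = inject_gaussian_noise(clean, sigma=20.0, rng=rng)

print(is_noisy(clean), is_noisy(noisy))
```

In this run the clean frame passes and the perturbed frame is flagged, which is the basic loop of any fault-injection test: apply a known disturbance, then confirm that the diagnostic notices it.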

The result? A clear demonstration of explainable AI in action, capable of maintaining safety and performance in dynamic environments.

*Copyright AIKO

A new era of safe autonomy

As AI-driven solutions keep gaining traction, the question should no longer be “Can we use AI safely?”, but rather “How can we make AI inherently safe?”

Projects like SAFEXPLAIN are answering that call. They prove that explainability and safety are not obstacles to innovation but foundations of reliable autonomy.

For AIKO, SAFEXPLAIN not only marked a major milestone but also added another foundational layer to the work we have been carrying out. It contributes directly to our vision: a fully AI-driven Guidance, Navigation, and Control system capable of autonomous mapping, proximity maneuvers, multi-sensor fusion, and trajectory planning.

The takeaway is clear: intelligent and explainable autonomy in space is a deployable reality. The next generation of autonomous spacecraft will not only think for themselves but do so transparently, safely, and reliably.

We dive deep into the details in the latest post from our AIKO lab: https://bit.ly/lab_safexplain_aiko

Watch the demo: https://bit.ly/demo_safexplain_aiko